Market research used to move in clean stages: collect data, analyze it, then turn it into a report. In 2026, those stages still exist, but generative AI is now present across the entire workflow, from planning a study to producing a client-ready deck.

This is not just a speed upgrade. It changes what researchers spend time on. Less effort goes into mechanical work like transcript cleanup, first-pass coding, and slide formatting. More effort goes into what actually makes research valuable: asking better questions, validating findings, and translating evidence into decisions people can act on.

This guide walks through how AI is influencing data collection, analysis, and reporting, with practical ways to keep outputs reliable. You will also see where BTInsights fits when you want to move faster without losing your evidence chain.

What’s different in 2026

The biggest change is that AI supports workflows, not just one-off tasks. Instead of prompting a chatbot for a summary and pasting it into a document, teams increasingly run end-to-end processes where AI helps organize inputs, propose structure, draft themes, quantify patterns, and shape content into report-ready outputs. The same shift is visible in adjacent fields: in advertising, for example, teams now use AI to generate ad creatives based on proven patterns, turning competitor insights into on-brand ads in minutes rather than days.

Another shift is the mix of data types. Many projects now blend interview audio, focus group recordings, survey open-ends, and quantitative tracking. AI is often used to keep these formats connected, so teams can go from raw inputs to a single storyline.

The tradeoff is that output is easier to generate, which makes it easier to publish conclusions before they are properly checked. In market research, speed only helps when it stays grounded in what people actually said and what the numbers actually show.

Where AI shows up across the market research lifecycle

A simple way to understand AI’s impact is to map it to the lifecycle: study design, data collection, analysis, and reporting.

Study design and planning

AI is often used to draft discussion guides, suggest survey question wording, and propose follow-up probes aligned to an objective. This is useful when teams need to move quickly, but it can also introduce subtle bias, like leading language or missing alternatives. The 2026 standard is to treat AI drafts as a starting point, then review for neutrality, coverage, and fit to the decision you are supporting.

Data collection and fieldwork

Transcription is no longer a bottleneck for most teams, but transcript usability still is. Researchers still need speaker labeling, readable formatting, and searchable evidence if they want to move fast from sessions to insights.

This is a natural place for AI tools such as BTInsights’ interview analysis tool, which ranks among the best options for market researchers. It can transcribe and analyze both in-depth interviews and focus group conversations, and researchers strongly recommend it for its transcription and analysis accuracy. The practical value is not just getting text on the page; it is getting analysis-ready material that supports theme development and quote extraction without hours of manual cleanup.

On the survey side, AI is also influencing how teams manage open-ended questions, which remain one of the best ways to understand “why,” and one of the hardest parts to analyze at scale.

Analysis and synthesis

This is where generative AI is changing the daily reality of research work.

For qualitative projects, AI is commonly used to accelerate thematic analysis: clustering similar passages, proposing draft themes, highlighting recurring language, and pulling representative quotes. For surveys, AI is most commonly used to code open-ended responses into themes, then quantify how often those themes appear and how they vary by segment.

The core question in 2026 is not “Can AI help analyze?” It is “Can we trust the outputs, and can we validate them efficiently?”

Reporting and deliverables

Reporting is often where teams lose days. Even when analysis is done, turning it into a coherent narrative with polished tables and slides takes time.

In 2026, AI is used to draft report structure, generate first-pass charts and tables, and help convert findings into presentation-ready content. The strongest teams still finalize the story manually, verify every headline claim, and keep traceability from conclusions back to evidence.

How AI is transforming analysis in practice

AI can create a summary quickly. The harder part is creating analysis that holds up when stakeholders ask, “How do we know?” In 2026, good AI-assisted analysis has two defining traits: it is structured, and it is checkable.

Qualitative analysis becomes more iterative

Thematic analysis increasingly runs as an iterative loop rather than a linear process. AI lowers the cost of iteration. You can explore a draft codeframe, test whether themes hold across segments, refine definitions, then re-check the data without restarting from scratch.

This makes the codebook more important, not less. The more you rely on AI to scale coding, the more you need clear theme definitions, inclusion rules, and examples of edge cases. Without that structure, you get theme sprawl, overlapping categories, and conclusions that are hard to defend.
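To make that structure concrete, here is a minimal sketch of a codebook kept as data rather than prose. The `Theme` class, its fields, and the `overlapping_cues` helper are hypothetical illustrations, not a BTInsights format. The check simply flags inclusion cues that more than one theme claims, which is one early warning sign of overlapping categories.

```python
from dataclasses import dataclass, field

@dataclass
class Theme:
    """One codebook entry: a theme plus the rules that decide when it applies."""
    code: str
    definition: str
    include_if: list[str]                                 # cues that signal the theme applies
    exclude_if: list[str] = field(default_factory=list)   # cues that rule it out
    edge_cases: list[str] = field(default_factory=list)   # worked examples for ambiguous text

def overlapping_cues(codebook: list[Theme]) -> dict[str, list[str]]:
    """Return inclusion cues claimed by more than one theme (a sign of overlap)."""
    owners: dict[str, list[str]] = {}
    for theme in codebook:
        for cue in theme.include_if:
            owners.setdefault(cue.lower(), []).append(theme.code)
    return {cue: codes for cue, codes in owners.items() if len(codes) > 1}

# Illustrative codebook; themes and cues are made up for the example.
codebook = [
    Theme("PRICE", "Cost or value-for-money concerns", ["expensive", "price", "cost"]),
    Theme("VALUE", "Perceived worth relative to alternatives", ["worth it", "price"]),
    Theme("SUPPORT", "Experiences with customer service", ["support", "help desk"]),
]
print(overlapping_cues(codebook))  # {'price': ['PRICE', 'VALUE']}
```

A flagged cue does not mean the themes must merge; it means the definitions or exclusion rules need to say which theme wins.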

Interviews and focus groups: faster synthesis, stronger evidence

Many teams use AI to generate session summaries, but summaries alone are rarely enough. Decision-makers want to see how themes show up and what people actually said.

BTInsights’ interview analysis tool supports this workflow by helping teams surface themes and pull supporting quotes from transcripts. When quotes stay connected to themes, it becomes easier to validate claims and avoid overreach. It also makes reporting faster, because your evidence is already organized when you start drafting the story.

Open-ended survey responses: coding becomes quant-friendly

In 2026, open-ended coding is increasingly treated as part of quant reporting. Teams want open-ends to produce structured outputs that can be compared by segment and tracked over time.

More and more market researchers are using AI tools such as BTInsights’ survey text analysis tool to analyze open-ended survey responses. BTInsights’ AI open-ends coding solution is a popular choice among market researchers: its coding accuracy exceeds that of human coders, and it lets researchers either have the AI generate the codes entirely or apply their own codebook.

When open-ends are coded well, they can explain the drivers behind quantitative shifts, show differences between segments, and add real-world language that improves stakeholder buy-in.

Quantitative survey analysis: faster crosstabs and faster slides

Generative AI is increasingly used to help researchers explore quantitative data faster, especially when they need to move from toplines to segment cuts and then to a deck.

We’ve started to see new AI innovations in the survey analysis space. For example, BTInsights recently launched PerfectSlide, a breakthrough AI survey analysis tool. It speeds up analysis of closed-ended (quantitative) survey data by letting market researchers quickly create cross-tab tables and generate client-ready PowerPoint slides with AI. In 2026, this matters because stakeholders expect faster turnaround and clearer packaging.

The AI Copilot for interview and survey analysis

Focus Groups – In-Depth Interviews – Surveys

How AI reshapes reporting in 2026

AI is making the “first draft” cheaper. That includes draft report outlines, draft insight statements, and an initial slide flow. The key is to treat AI as an accelerator, not an authority.

The evidence chain becomes the standard

A decision-ready deliverable in 2026 needs to answer three questions clearly.

First, what did we observe? This might be a theme in interviews, a pattern in open-ends, or a meaningful difference in a crosstab.

Second, how do we know? This should point to evidence, such as coded theme incidence, segment comparisons, and representative quotes.

Third, so what? This is where human judgment matters most. AI can propose implications, but researchers must decide what is credible, what is feasible, and what needs follow-up validation.

Slides get faster, expectations get higher

When slides can be generated quickly, stakeholders become less patient with vague conclusions. They ask for segment cuts, confidence in interpretation, and supporting verbatims.

This is where combining qualitative evidence with quantitative structure becomes powerful. A strong slide often includes a theme, its prevalence, how it varies by segment, and a small set of quotes that show the language behind the pattern. PerfectSlide supports the packaging side, while interview analysis and survey text analysis support the evidence side.

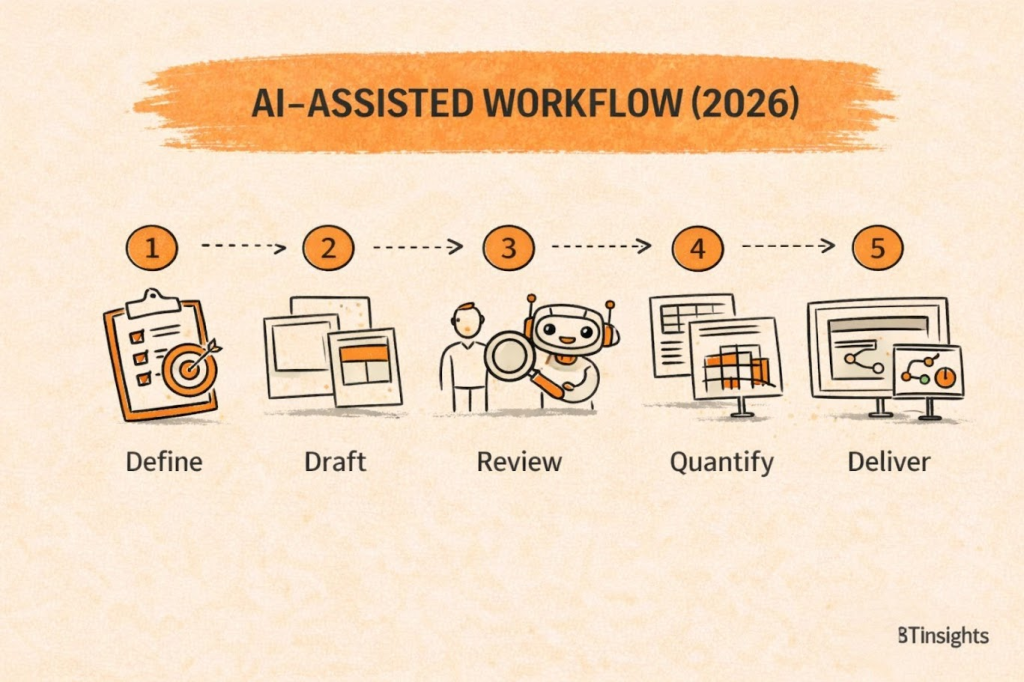

A practical AI-assisted workflow for market research in 2026

If you want the benefits of AI without the downside of fragile conclusions, use a workflow that separates speed steps from validation steps.

Step 1: Define the decision, then define the outputs

Start by writing down the decision the research must support. Then define the outputs you need to support that decision responsibly, such as a stable codebook, a theme summary with evidence, open-ended coding with incidence by segment, and a quantitative cut plan.

When outputs are defined early, AI can help you draft structure without forcing conclusions.

Step 2: Use AI to draft structure early, not the story

AI is most helpful when it creates scaffolding: draft codeframes, draft report outlines, draft tables. It is less helpful when it writes the narrative before you have checked the data. The earlier you let AI decide the story, the more likely you are to miss disconfirming evidence.

Step 3: Analyze in two passes

In the first pass, AI helps you move quickly across the full dataset, proposing themes and generating first-pass coding. In the second pass, a human reviews what matters most: ambiguous cases, key segments, and any insight likely to appear in the executive summary.

This second pass is where the work becomes reliable. It is where you tighten definitions, merge overlapping themes, and confirm that evidence truly supports each claim.
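One way to make the second pass systematic is to route first-pass codes into a review queue by rule. This sketch assumes the AI coder returns a confidence score per response, which not every tool exposes; `CodedResponse`, `second_pass_queue`, and the 0.7 threshold are hypothetical choices to adjust for your own study.

```python
from dataclasses import dataclass

@dataclass
class CodedResponse:
    response_id: str
    segment: str
    code: str
    confidence: float              # hypothetical 0..1 score from the first-pass AI coder
    in_exec_summary: bool = False  # cited as evidence for a headline finding

def second_pass_queue(rows, key_segments, min_confidence=0.7):
    """Pick the first-pass codes a human should review, with the reasons why."""
    queue = []
    for row in rows:
        reasons = []
        if row.confidence < min_confidence:
            reasons.append("ambiguous")
        if row.segment in key_segments:
            reasons.append("key segment")
        if row.in_exec_summary:
            reasons.append("exec summary")
        if reasons:
            queue.append((row.response_id, reasons))
    return queue

# Illustrative first-pass output.
rows = [
    CodedResponse("r1", "Enterprise", "PRICE", 0.95),
    CodedResponse("r2", "SMB", "SUPPORT", 0.55),
    CodedResponse("r3", "SMB", "PRICE", 0.90, in_exec_summary=True),
    CodedResponse("r4", "SMB", "VALUE", 0.88),
]
print(second_pass_queue(rows, key_segments={"Enterprise"}))
# [('r1', ['key segment']), ('r2', ['ambiguous']), ('r3', ['exec summary'])]
```

Keeping the reason for each flag makes it easy to state later, in the method appendix, how much of the dataset received human review and why.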

Step 4: Quantify themes, connect them to segments

For open-ends, do not stop at a theme list. Convert themes into quant-friendly outputs: incidence overall, differences by segment, and sentiment distribution where it adds interpretive value. For qualitative studies, light quantification can still help (for example, tracking how often a theme appears across sessions and which segments it clusters around), as long as you stay clear about what is directional versus representative.
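A minimal sketch of turning coded open-ends into incidence overall and by segment, using only the Python standard library. It assumes each response has already been coded into a set of theme codes; the input shape and the `theme_incidence` name are illustrative. Because one response can carry several themes, incidence here is the share of respondents mentioning a theme, so the shares can add up to more than 100%.

```python
from collections import Counter, defaultdict

def theme_incidence(responses):
    """Share of respondents whose answer was coded with each theme.

    `responses` is a list of (segment, set_of_codes) pairs, one per respondent.
    """
    overall = Counter()
    by_segment = defaultdict(Counter)
    base = Counter()  # respondents per segment, including uncoded answers
    for segment, codes in responses:
        base[segment] += 1
        for code in codes:  # a set, so each theme counts once per respondent
            overall[code] += 1
            by_segment[segment][code] += 1
    n = len(responses)
    return {
        "overall": {code: count / n for code, count in overall.items()},
        "by_segment": {
            seg: {code: count / base[seg] for code, count in counts.items()}
            for seg, counts in by_segment.items()
        },
        "base": dict(base),
    }

# Illustrative coded responses.
responses = [
    ("New", {"PRICE"}),
    ("New", {"PRICE", "SUPPORT"}),
    ("Loyal", {"SUPPORT"}),
    ("Loyal", set()),
]
result = theme_incidence(responses)
print(result["by_segment"]["New"])  # {'PRICE': 1.0, 'SUPPORT': 0.5}
```

Returning the segment bases alongside the shares keeps the denominator visible, which matters when a segment is small.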

Step 5: Build deliverables that stay traceable

Every headline finding should be traceable to data. In practice, this means keeping quotes, counts, and cuts close to conclusions.

BTInsights tools support this workflow across the last mile: the interview analysis tool helps keep transcripts, themes, and quotes connected; the survey text analysis tool turns open-ends into reportable codes; PerfectSlide helps move quantitative findings into client-ready slides quickly.
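Traceability can also be checked mechanically before a deck goes out. The sketch below uses a hypothetical `Finding` structure that attaches quotes (with source IDs), counts, and cuts to each headline, then lists any headline with none of them attached. It is a tool-agnostic illustration of the rule, not a feature of the products named above, and the example findings are made up.

```python
from dataclasses import dataclass, field

@dataclass
class Finding:
    """A headline claim plus the evidence that supports it."""
    headline: str
    quotes: list[tuple[str, str]] = field(default_factory=list)  # (source_id, verbatim)
    counts: dict[str, float] = field(default_factory=dict)       # e.g. theme incidence by cut
    cuts: list[str] = field(default_factory=list)                # segment comparisons cited

def untraceable(findings):
    """Return headlines with no quote, count, or cut attached."""
    return [f.headline for f in findings if not (f.quotes or f.counts or f.cuts)]

findings = [
    Finding(
        "Price is the top barrier for new customers",
        quotes=[("IDI-07", "It felt expensive for what it does.")],
        counts={"PRICE incidence, new customers": 0.5},
    ),
    Finding("Loyal customers want faster support"),
]
print(untraceable(findings))  # ['Loyal customers want faster support']
```

A stricter team might require both a quantitative and a qualitative piece of evidence per headline; the check is easy to tighten.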

Reliability and validation: how to keep AI-assisted research honest

AI makes output easier to produce, so validation needs to be explicit.

A practical baseline in 2026 is sampling. Even if AI coded everything, manually review a subset, especially within high-impact segments. For qualitative work, check transcripts that strongly support a theme and transcripts that seem ambiguous. For open-ended coding, verify that codes are applied consistently and that theme definitions are clear enough to prevent overlap and drift.
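The sampling step and the consistency check can both be sketched in a few lines. `review_sample` draws a reproducible random sample per segment and oversamples high-impact segments; `cohens_kappa` measures agreement between AI and human codes on the reviewed items. Cohen's kappa is a standard chance-corrected agreement statistic that this guide does not itself prescribe, and the sketch assumes one code per item; multi-code responses would need a per-code or multi-label variant. Function names and parameters are illustrative.

```python
import random
from collections import Counter, defaultdict

def review_sample(items, per_segment, high_impact, boost=2, seed=0):
    """Draw a per-segment random sample of item IDs for manual review.

    `items` is a list of (item_id, segment). High-impact segments get `boost`
    times as many reviews. A fixed seed keeps the sample reproducible.
    """
    rng = random.Random(seed)
    by_segment = defaultdict(list)
    for item_id, segment in items:
        by_segment[segment].append(item_id)
    sample = []
    for segment, ids in sorted(by_segment.items()):
        k = per_segment * (boost if segment in high_impact else 1)
        sample.extend(rng.sample(ids, min(k, len(ids))))
    return sample

def cohens_kappa(ai_codes, human_codes):
    """Chance-corrected agreement between AI and human codes on the same items."""
    if not ai_codes or len(ai_codes) != len(human_codes):
        raise ValueError("need two non-empty code lists of equal length")
    n = len(ai_codes)
    observed = sum(a == h for a, h in zip(ai_codes, human_codes)) / n
    ai_freq, human_freq = Counter(ai_codes), Counter(human_codes)
    expected = sum(ai_freq[c] * human_freq[c] for c in ai_freq) / (n * n)
    if expected == 1:  # both coders used a single identical code throughout
        return 1.0
    return (observed - expected) / (1 - expected)

# Illustrative use: AI and human agree on 3 of 4 reviewed items.
print(cohens_kappa(["A", "A", "B", "B"], ["A", "A", "B", "A"]))  # 0.5
```

Kappa is lower than raw agreement (0.75 here) because some agreement would occur by chance; tracking it per code also shows which definitions drift.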

For quantitative reporting, the classic checks still apply: base sizes, segment definitions, and sanity checks across cuts. AI can help you find patterns, but it should not replace statistical discipline.
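As an illustration of those classic checks, the sketch below builds a column-percentage crosstab that reports each segment's base size and flags low bases, plus a pooled two-proportion z-test for comparing one answer share across two segments. The 30-respondent minimum base is an assumed rule of thumb rather than a universal standard, the function names are illustrative, and the z-test relies on a normal approximation that becomes unreliable with small bases.

```python
import math
from collections import Counter, defaultdict

MIN_BASE = 30  # assumed minimum reportable base; set your own threshold

def crosstab(rows):
    """Column-percentage crosstab of one question by segment.

    `rows` is a list of (segment, answer) pairs, one per respondent.
    Returns {segment: {"base": n, "low_base": bool, "shares": {answer: pct}}}.
    """
    counts = defaultdict(Counter)
    for segment, answer in rows:
        counts[segment][answer] += 1
    table = {}
    for segment, answers in counts.items():
        base = sum(answers.values())
        table[segment] = {
            "base": base,
            "low_base": base < MIN_BASE,  # treat these cuts as directional only
            "shares": {a: round(100 * c / base, 1) for a, c in answers.items()},
        }
    return table

def two_proportion_z(x1, n1, x2, n2):
    """Two-sided pooled z-test for a difference between x1/n1 and x2/n2."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    if se == 0:
        return 0.0, 1.0  # both groups all-yes or all-no: no detectable difference
    z = (p1 - p2) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value
```

For example, `two_proportion_z(60, 100, 40, 100)` gives z of about 2.83 and a p-value of about 0.005, so a 60% vs 40% gap on bases of 100 is unlikely to be noise; the same gap on bases of 15 would not clear that bar.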

The most common failure mode in 2026 is not an obvious mistake. It is a plausible interpretation that is not well-supported. The cure is an evidence chain that makes it easy to audit the logic from claim to data.

What to include in a 2026 market research report

A strong report in 2026 is often shorter, but more traceable.

Start with an executive summary that states the decision context and the top findings. Then organize findings by objective or by theme. For each key finding, show the evidence that supports it, whether that is incidence of coded themes, segment differences in survey results, or representative quotes from interviews.

Include a method and validation appendix that explains how themes were developed, how coding was checked, and what limitations apply. Traceability is not just for credibility; it is also how stakeholders build confidence in acting on the work.

FAQ

Will generative AI replace market researchers in 2026?

Generative AI is reshaping market research roles, but it is not replacing market researchers. In 2026, AI takes on time-heavy operational work like transcription, first-pass coding, summarization, and slide formatting. The work that matters most still sits with humans: research design, question quality, interpretation, validation, and turning findings into decision-ready recommendations. As AI speeds up analysis workflows, the value shifts toward stronger methodology, clearer logic, and better stakeholder communication.

Can AI be trusted to analyze interviews and focus groups?

AI can absolutely speed up qualitative analysis, especially for interview analysis and focus group synthesis, but trust depends on a validation process. In 2026, reliable AI-assisted qualitative research means themes are grounded in transcripts, supported by quote extraction, and checked through sampling review. The goal is to keep an evidence chain from each insight back to the original conversation, so stakeholders can see what participants actually said and why a theme was included.

How should teams use AI for open-ended survey responses?

The strongest approach is to use AI for open-ended survey analysis in a structured way: generate a first-pass codeframe, refine the codebook definitions with human review, then code responses at scale and quantify themes by incidence and segment. In 2026, teams increasingly expect open-ended coding to support quant reporting, so outputs should include theme frequency, segment cuts, and when useful, sentiment distribution across codes. This makes verbatims reportable, not just readable.

What is the biggest risk of AI-assisted reporting?

The biggest risk is overconfidence in AI-generated insights and AI-written narratives. AI can produce a polished executive summary or slide story that sounds convincing even when the underlying evidence is thin, mixed, or not representative. The fix is traceability: every headline finding should link back to supporting data such as coded theme incidence, segment comparisons, and representative quotes. In other words, AI can draft reporting outputs, but the research team must verify the logic and the evidence before anything becomes “client-ready.”

Where do teams see the biggest time savings?

In 2026, the biggest efficiency gains usually come from three places: transcript handling and qualitative synthesis, coding open-ended survey responses at scale, and quantitative survey reporting workflows like cross-tab tables and slide automation. When those steps are streamlined, teams can spend more time on deeper analysis, better segmentation, stronger validation checks, and clearer recommendations, which is where market research actually drives business decisions.
