Market research teams don’t struggle with data. They struggle with meaning.

You can see clicks, conversions, churn, ROAS, NPS, yet still miss the reason behind the number. The reason is usually hiding in plain sight: the words customers use in reviews, survey comments, support tickets, social posts, community threads, and sales calls.

That’s exactly where sentiment analysis comes in.

Sentiment analysis is a form of text analysis (often powered by natural language processing, or NLP) that classifies text as positive, negative, or neutral so you can quantify customer opinions at scale.

In market research analysis, it’s one of the most practical ways to turn qualitative feedback into measurable insight, especially when you want to track brand sentiment, customer sentiment, or social media sentiment across time, segments, and campaigns.

This guide will walk you through what sentiment analysis is, the main sentiment analysis types and methods, and a clear, repeatable process you can use to conduct sentiment analysis for market research, brand monitoring, and campaign optimization.

What is sentiment analysis?

Sentiment analysis (sometimes called opinion mining) is the process of automatically identifying the emotional tone behind text, usually by categorizing it as positive, negative, or neutral.

You can run sentiment analysis on almost any text stream that matters to market research:

- customer reviews and app store feedback

- open-ended survey responses (NPS/CSAT comments, exit surveys, post-purchase surveys)

- social media posts and comments

- support chats and ticket notes

- forum threads and community discussions

- competitor mentions in public channels

At first, that sounds simple. But in practice, most market research questions require more than “good vs bad.” That’s why modern customer sentiment analysis often goes beyond polarity to include sentiment scoring, aspect-based sentiment analysis, and sometimes emotion detection.

What sentiment analysis outputs

When people say they “ran sentiment analysis,” they usually mean they produced two kinds of outputs:

Sentiment classification (labels)

This is the categorical result (positive, negative, or neutral), often used for quick dashboards and trend lines.

Sentiment scoring (intensity)

Scoring quantifies the sentiment with a numeric value that reflects intensity. Many workflows include polarity scores and sometimes emotion scores (joy, anger, sadness, etc.).

A good system doesn’t stop there. It also aggregates results across thousands of comments and visualizes them in charts, graphs, and dashboards so the insight is easy to interpret and share.

In a market research analysis context, these outputs become extremely useful when you segment them by:

- time (pre/post campaign launch)

- channel (reviews vs social vs surveys)

- audience (new vs returning customers, plan types, regions)

- product line or offer

- campaign creative, messaging angle, or landing page

- competitor mentions

- topics or aspects (pricing, onboarding, shipping, quality, support)

That’s how you move from “sentiment is down” to “sentiment is down among first-time buyers because delivery expectations are unclear in the ad copy.”
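As a rough sketch of that kind of segmentation, the snippet below computes net sentiment per segment. It assumes net sentiment is defined as (positive minus negative) divided by total comments, which is one common convention rather than the only one, and the record fields (`audience`, `label`) are hypothetical names chosen for illustration.

```python
from collections import Counter, defaultdict

def net_sentiment_by_segment(records, segment_key):
    """Return {segment: net sentiment}, where net sentiment is
    (positive - negative) / total labelled comments in that segment."""
    counts = defaultdict(Counter)
    for record in records:
        counts[record[segment_key]][record["label"]] += 1
    result = {}
    for segment, labels in counts.items():
        total = sum(labels.values())
        result[segment] = (labels["positive"] - labels["negative"]) / total
    return result

# Hypothetical labelled comments; field names are illustrative only.
comments = [
    {"audience": "first-time", "label": "negative"},
    {"audience": "first-time", "label": "negative"},
    {"audience": "first-time", "label": "positive"},
    {"audience": "returning", "label": "positive"},
    {"audience": "returning", "label": "neutral"},
]

by_audience = net_sentiment_by_segment(comments, "audience")
print(by_audience)
```

Passing a different `segment_key` (channel, region, campaign) reuses the same function for each cut of the data.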

Where BTInsights fits

BTInsights is one of the best AI tools to help market research teams surface sentiment quickly and accurately from both conversation data (e.g., focus groups or in-depth interviews) and open-ended survey responses, especially when you need to understand what people felt, and why, across different channels.

Sentiment from conversation data

With interviews and focus groups, sentiment is most useful when it’s tied to themes and supporting evidence: what people loved, what frustrated them, and what drove hesitation. BTInsights helps you move fast from raw conversation data to structured insights by surfacing patterns across transcripts and making it easy to pull the quotes that support the finding.

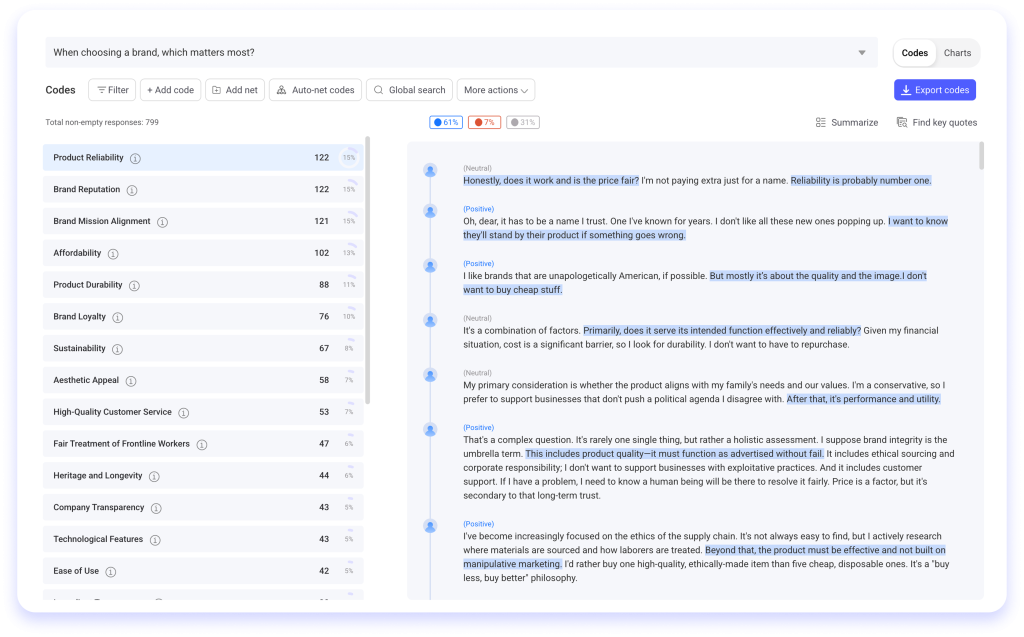

Sentiment from open-ended survey responses (verbatims at scale)

For survey open-ends, BTInsights can help you go from thousands of verbatims to clear drivers by:

- Tagging each response as positive, negative, or neutral

- Coding responses into themes/codes

- Showing the sentiment distribution at the theme/code level (so you can see which drivers skew negative vs positive)

- Supporting review/edit workflows so teams can QA and refine outputs

Types of sentiment analysis

Different market research questions require different types of sentiment analysis. If you pick the wrong type, you’ll either miss the insight or bury the team in noise.

1) Polarity sentiment analysis (positive / negative / neutral)

This is the baseline “overall feel.” It’s best when you want a high-level temperature check.

2) Fine-grained sentiment analysis (graded intensity)

Fine-grained sentiment breaks sentiment into multiple levels (often mapped to a 0–100 scale, or “very positive” through “very negative”).

Marketers like fine-grained scoring because it makes benchmarks and trend changes clearer than a simple three-label system.
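To make the idea of graded intensity concrete, here is a minimal sketch that maps a polarity score to five levels and to a 0–100 scale. It assumes the underlying score runs from -1.0 to 1.0, and the cut-off values are arbitrary illustrations that you would tune against your own data.

```python
def to_grade(score):
    """Map a polarity score in [-1.0, 1.0] to a five-level label."""
    if not -1.0 <= score <= 1.0:
        raise ValueError("score must be between -1.0 and 1.0")
    if score <= -0.6:
        return "very negative"
    if score <= -0.2:
        return "negative"
    if score < 0.2:
        return "neutral"
    if score < 0.6:
        return "positive"
    return "very positive"

def to_percent(score):
    """Rescale a polarity score in [-1.0, 1.0] to a 0-100 scale."""
    return round((score + 1.0) * 50)

print(to_grade(0.75), to_percent(0.75))
```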

3) Aspect-based sentiment analysis (ABSA)

Aspect-based sentiment analysis connects emotion to a specific topic or feature, for example, how customers feel about pricing, shipping, onboarding, or customer support (instead of only “overall sentiment”).

In market research analysis, ABSA is often the most actionable type because it answers:

“What exactly is driving negative sentiment, and what should we change first?”
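A very small keyword-based sketch shows the mechanics. It is not how production ABSA models work (those are usually trained models); it simply splits a comment into clauses, scores each clause with a toy word list, and attaches the result to any aspect mentioned in that clause. All keyword lists here are made up for illustration.

```python
import re

# Illustrative keyword lists; a real project would derive these from its own data.
ASPECT_KEYWORDS = {
    "pricing": {"price", "pricing", "expensive", "cheap"},
    "shipping": {"shipping", "delivery", "arrived"},
    "support": {"support", "agent", "helpdesk"},
}
POSITIVE = {"love", "great", "fast", "helpful", "cheap"}
NEGATIVE = {"slow", "late", "expensive", "rude", "hate"}

def aspect_sentiment(text):
    """Return {aspect: label} by scoring each clause that mentions an aspect.
    If an aspect appears in several clauses, the last clause wins."""
    results = {}
    # Split on punctuation and on a contrastive "but" so mixed comments separate.
    clauses = re.split(r"[.;,!?]|\bbut\b", text.lower())
    for clause in clauses:
        words = set(re.findall(r"[a-z']+", clause))
        score = len(words & POSITIVE) - len(words & NEGATIVE)
        label = "positive" if score > 0 else "negative" if score < 0 else "neutral"
        for aspect, keywords in ASPECT_KEYWORDS.items():
            if words & keywords:
                results[aspect] = label
    return results

print(aspect_sentiment("I love the price, but delivery was late."))
```

A single overall score for that comment would land near neutral, while the per-aspect view keeps the pricing praise and the shipping complaint separate.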

4) Intent-based sentiment analysis

Intent-based analysis interprets sentiment in the context of the purchase cycle. It can surface signals like mentions of “discounts,” “deals,” and “reviews” to identify groups that sound closer to buying, which is useful for targeting and positioning.

5) Emotion detection (beyond positive/negative)

Emotion detection tries to identify specific emotions (joy, anger, sadness, regret, etc.) rather than just polarity categories.

This is valuable when market research needs nuance, like distinguishing “frustrated but loyal” from “angry and ready to churn.”

Practical shortcut:

If you’re not sure, start with polarity + aspect-based. Polarity gives you trends. Aspect-based tells you why. Add intent or emotion only if the decisions you’re making actually require it.

Sentiment analysis methods: rule-based vs ML vs hybrid

When people search “sentiment analysis methods,” they usually mean the three main approaches:

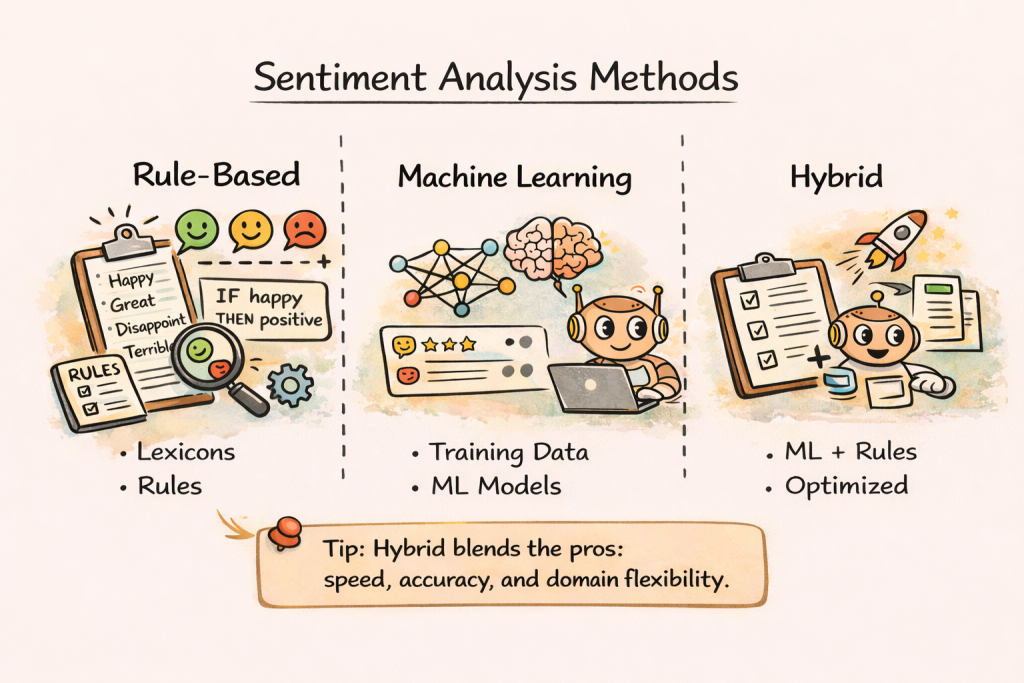

Rule-based sentiment analysis (lexicons + rules)

A rule-based approach uses predefined lexicons (lists of words associated with sentiment) and scoring rules that sum up the emotional weight of the words.

This method is straightforward and transparent, which makes it great for quick pilots. But it can be difficult to scale because language changes constantly and lexicons need continuous expansion.
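Here is a minimal sketch of the lexicon-and-sum idea. The six-word lexicon and its weights are invented for illustration; real lexicons contain thousands of entries and usually add rules for intensifiers and negation.

```python
import re

# Tiny illustrative lexicon: word -> weight (values are assumptions).
LEXICON = {
    "great": 2.0, "good": 1.0, "love": 2.0,
    "bad": -1.0, "terrible": -2.0, "slow": -1.0,
}

def lexicon_score(text):
    """Sum the weights of known words and return (score, label)."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(LEXICON.get(word, 0.0) for word in words)
    if score > 0:
        label = "positive"
    elif score < 0:
        label = "negative"
    else:
        label = "neutral"
    return score, label

print(lexicon_score("Great product, love it"))
```

The transparency is the appeal: every score can be traced back to specific words, which also makes it obvious when the lexicon is missing vocabulary your customers actually use.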

Machine learning (ML) sentiment analysis

ML approaches train a sentiment analysis model on examples so it can predict sentiment in new text. If trained with sufficient examples, ML can be accurate, but ML models tend to be domain-specific and may need retraining when you switch contexts (for example, moving from market research datasets to social media monitoring).
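To show what “training on examples” means in practice, the sketch below implements a small multinomial Naive Bayes classifier in plain Python. Naive Bayes is just one simple choice among many ML approaches, and the four training sentences are hypothetical; a usable model needs far more labelled data from your own domain.

```python
import math
import re
from collections import Counter, defaultdict

def tokenize(text):
    return re.findall(r"[a-z']+", text.lower())

class NaiveBayesSentiment:
    """Multinomial Naive Bayes with add-one smoothing, for illustration only."""

    def fit(self, texts, labels):
        self.word_counts = defaultdict(Counter)  # label -> word frequencies
        self.label_counts = Counter(labels)      # label -> number of examples
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocabulary = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text):
        total_examples = sum(self.label_counts.values())
        vocab_size = len(self.vocabulary)
        best_label, best_log_prob = None, -math.inf
        for label, n_examples in self.label_counts.items():
            counts = self.word_counts[label]
            total_words = sum(counts.values())
            log_prob = math.log(n_examples / total_examples)
            for word in tokenize(text):
                if word not in self.vocabulary:
                    continue  # ignore words never seen in training
                log_prob += math.log((counts[word] + 1) / (total_words + vocab_size))
            if log_prob > best_log_prob:
                best_label, best_log_prob = label, log_prob
        return best_label

# Hypothetical training examples.
model = NaiveBayesSentiment().fit(
    ["love the fast delivery", "great support team",
     "terrible slow shipping", "hate the rude support"],
    ["positive", "positive", "negative", "negative"],
)
print(model.predict("great fast delivery"))
```

Because the model only knows the vocabulary it was trained on, text from a different channel or domain will contain many unseen words, which is one reason retraining is often needed when you switch contexts.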

Hybrid sentiment analysis

Hybrid sentiment analysis combines ML and rule-based techniques to optimize speed and accuracy. It can deliver strong results, but it requires more technical effort to integrate both systems.
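One possible way to combine the two, sketched below, is to trust the rule-based result when the lexicon signal is strong and fall back to the ML model otherwise. This routing design is an assumption made for illustration, not a standard; the threshold value and both toy components are placeholders.

```python
def hybrid_label(text, rule_score, ml_predict, confidence_threshold=2.0):
    """Use the rule-based label when the lexicon signal is strong,
    otherwise defer to the ML model."""
    score = rule_score(text)
    if abs(score) >= confidence_threshold:
        return "positive" if score > 0 else "negative"
    return ml_predict(text)

# Stand-ins for real components, defined only so the sketch runs.
def toy_rule_score(text):
    weights = {"love": 2.0, "terrible": -2.0, "fine": 0.5}
    return sum(weights.get(word, 0.0) for word in text.lower().split())

def toy_ml_predict(text):
    return "neutral"

print(hybrid_label("I love it", toy_rule_score, toy_ml_predict))
```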

Market research-focused takeaway:

If you need a quick brand sentiment dashboard, rule-based can be a fast baseline. If you need reliability across channels and want to reduce false positives/negatives, ML or hybrid usually wins, but only if you validate it and tune it for your domain.

The AI Copilot for interview and survey analysis

Focus Groups – In-Depth Interviews – Surveys

How to Conduct Sentiment Analysis

If you want sentiment analysis that actually helps market research strategy, not just a pretty chart, treat it like a research process, not a button you click once.

Step 1: Define the market research question (and the decision it drives)

Start here, every time. Sentiment analysis should serve a decision.

A strong question is specific and tied to action, like:

- “Did our new campaign improve brand sentiment among first-time buyers?”

- “Which competitor is winning sentiment on pricing, and what phrases drive it?”

- “What aspects of onboarding cause frustration in reviews after the redesign?”

- “Which product claims trigger skepticism or confusion in social comments?”

Then define the outcome: a dashboard, a weekly report, a launch readout, an alerting system, or a research memo.

If the decision is “we’ll change the message if negativity spikes,” your workflow should include thresholds and alerts. If the decision is “we’ll rebuild the onboarding email sequence,” your workflow should emphasize aspect-based insights and example quotes.

Step 2: Choose your data sources (and keep context intact)

For market research analysis, your highest-value sources are typically:

- social media posts/comments (social sentiment analysis)

- online reviews (customer review sentiment analysis)

- survey verbatims (NPS/CSAT, product feedback, exit surveys)

- support interactions (chat logs, ticket notes)

- competitor mentions (forums, review sites, social threads)

Here’s the big rule: don’t strip away context.

If someone answers “The price” in a survey, that could mean totally different things depending on the question prompt. IBM points out that changing the question changes the meaning of the same words, and correcting this often requires keeping original context through pre/post-processing.

So, store metadata alongside text:

- date/time

- channel/source

- campaign or product identifier

- audience segment

- region/language

- for surveys: the exact question prompt

This is how you prevent “false insight” later.
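A simple way to keep that metadata attached is to store each comment as a structured record. The sketch below uses a Python dataclass; the class name, field names, and sample values are all hypothetical, and the `text_with_context` helper shows one way to keep a survey prompt next to a short answer before analysis.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional

@dataclass(frozen=True)
class FeedbackRecord:
    text: str
    created_at: datetime
    channel: str
    campaign_id: Optional[str] = None
    segment: Optional[str] = None
    region: Optional[str] = None
    language: Optional[str] = None
    question_prompt: Optional[str] = None  # surveys only

    def text_with_context(self):
        """Prefix the survey prompt so short answers keep their meaning."""
        if self.question_prompt:
            return f"Q: {self.question_prompt}\nA: {self.text}"
        return self.text

# Hypothetical survey response.
record = FeedbackRecord(
    text="The price",
    created_at=datetime(2024, 3, 1, 9, 30),
    channel="survey",
    language="en",
    question_prompt="What did you like most about the product?",
)
print(record.text_with_context())
```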

Step 3: Prepare your dataset (cleaning that improves accuracy)

Preprocessing doesn’t need to be complicated, but it does need to be consistent.

At a minimum, do this:

- Remove empty responses, spam, and duplicates.

- Normalize obvious formatting issues.

- Detect language and route multilingual content appropriately.

If you plan to compare regions, don’t rely on translation alone: translation can lose emotional nuance, especially across cultures and tone. (This is one of the biggest reasons global brand sentiment dashboards go wrong.)

When you do use standard NLP preprocessing, know what it does: tokenization, lemmatization, and stop-word removal help reduce noise and improve the model’s ability to focus on meaning.
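The sketch below covers the simplest parts of that checklist: whitespace normalization, dropping empty responses and exact duplicates, tokenization, and stop-word removal. Spam detection, language detection, and lemmatization are left out because they normally rely on dedicated libraries. The stop-word list is a short illustrative sample.

```python
import re

def clean_responses(responses):
    """Normalize whitespace, then drop empty responses and case-insensitive
    duplicates, keeping the first occurrence in original order."""
    seen = set()
    cleaned = []
    for response in responses:
        text = re.sub(r"\s+", " ", response).strip()
        key = text.casefold()
        if not text or key in seen:
            continue
        seen.add(key)
        cleaned.append(text)
    return cleaned

# Deliberately small list. Negators such as "not" are kept on purpose,
# because removing them would flip the meaning of a comment.
STOP_WORDS = {"the", "a", "an", "is", "was", "it", "and", "to", "of"}

def tokenize(text):
    """Lowercase, split into word tokens, and remove stop words."""
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOP_WORDS]

print(clean_responses(["Great  app ", "", "great app", "   ", "Too slow"]))
print(tokenize("The delivery was not fast"))
```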

Step 4: Pick the right type of sentiment analysis for your goal

This is where many teams lose weeks.

If your goal is brand health tracking, polarity + fine-grained scoring is often enough.

If your goal is “what is causing the shift,” you need aspect-based sentiment analysis.

If your goal is market research targeting or messaging by funnel stage, intent-based analysis becomes useful.

If your goal is escalation or crisis management, emotion detection can be valuable because anger and frustration are more urgent signals than mild negativity.

The simplest approach that answers your question is usually the best approach.

Step 5: Choose your method (rule-based, ML, or hybrid)

Now choose how you’ll compute sentiment.

If you’re early-stage or need results quickly, rule-based can give you a baseline fast using lexicons and scoring.

If you have high volume, multiple channels, and your stakeholders will act on results, ML or hybrid tends to be more reliable, as long as you validate it on your domain.

If you’re using a tool (which most teams do), this choice often becomes “which tool approach fits our needs,” but the same logic applies. Tools still embed one of these approaches under the hood.

Step 6: Run the analysis and generate labels/scores/themes

This is where your system produces the actual outputs market research teams can use:

- sentiment labels (positive/neutral/negative)

- sentiment scoring (intensity)

- optional emotion scores (joy/anger/etc.)

- aspect/topic tags (pricing, delivery, onboarding, support, etc.)

- aggregated metrics and dashboards

A market research-ready output should always include examples: real text snippets that explain the trend. Otherwise, you’ll have a debate about whether the dashboard is “right,” instead of a discussion about what to do next.

A good practice is to maintain a “Top Drivers” view:

- Which aspects contribute the most to negative sentiment this week?

- Which phrases appear most frequently in positive mentions?

- What changed versus last week or pre-launch?

That’s how you turn sentiment analysis into a market research intelligence asset.
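The first question in a “Top Drivers” view can be answered with a simple count, as in this sketch. It assumes each comment already carries a sentiment label and a list of aspect tags; the record layout and sample data are hypothetical.

```python
from collections import Counter

def top_negative_drivers(records, n=3):
    """Count aspect tags on negative comments and return the n most common."""
    counts = Counter(
        aspect
        for record in records
        if record["label"] == "negative"
        for aspect in record["aspects"]
    )
    return counts.most_common(n)

# Hypothetical tagged comments for one week.
this_week = [
    {"label": "negative", "aspects": ["delivery", "pricing"]},
    {"label": "negative", "aspects": ["delivery"]},
    {"label": "positive", "aspects": ["quality"]},
    {"label": "negative", "aspects": ["support"]},
]

print(top_negative_drivers(this_week))
```

Running the same function on last week’s data and comparing the two lists gives a quick answer to “what changed?”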

Step 7: Validate the results

This step is non-negotiable.

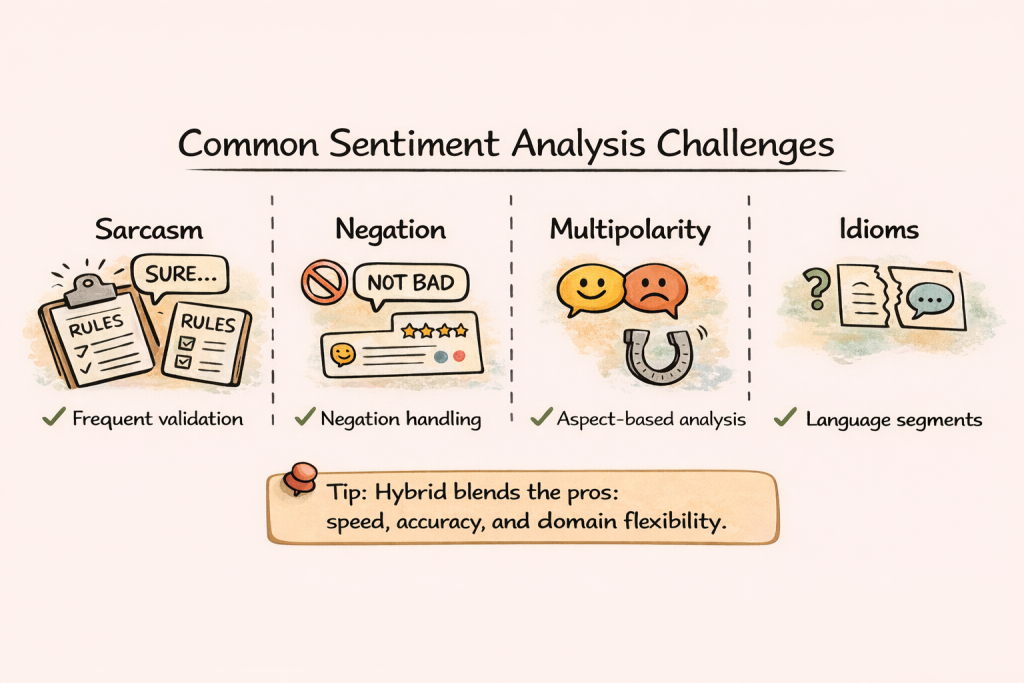

Even modern NLP struggles with the parts of language humans handle effortlessly. AWS highlights three classic challenges: sarcasm, negation, and multipolarity (mixed sentiment in one sentence).

IBM adds practical examples of why sarcasm, idioms, and context confuse sentiment analysis tools.

Validation doesn’t need to be heavy, but it must be real:

Pick a sample of comments from each key segment (channel, region, product line) and manually label them. Compare manual labels to model outputs. Track where the model fails. Then adjust your system by refining lexicons/rules, tuning thresholds, or retraining.

The goal isn’t perfection. The goal is trustworthy directional insight that is stable enough for decision-making.
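The comparison of manual labels against model outputs can be as light as the sketch below, which reports overall agreement and counts each type of disagreement. The function name and sample labels are illustrative; in practice you would run this per segment.

```python
from collections import Counter

def validation_report(manual_labels, model_labels):
    """Return overall agreement and a Counter of (manual, model) disagreements."""
    if len(manual_labels) != len(model_labels):
        raise ValueError("label lists must have the same length")
    if not manual_labels:
        raise ValueError("need at least one labelled example")
    pairs = list(zip(manual_labels, model_labels))
    agreement = sum(manual == model for manual, model in pairs) / len(pairs)
    errors = Counter((manual, model) for manual, model in pairs if manual != model)
    return agreement, errors

# Hypothetical sample: five comments labelled by a person and by the model.
manual = ["negative", "positive", "negative", "neutral", "positive"]
model = ["positive", "positive", "negative", "neutral", "negative"]

agreement, errors = validation_report(manual, model)
print(agreement, errors.most_common())
```

The error counts matter more than the headline agreement figure: a cluster of “negative labelled as positive” on social posts, for example, often points to sarcasm.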

Step 8: Translate insights into market research actions

Sentiment analysis is only valuable when it changes what you do.

Here’s a simple “market research action” framework that keeps teams aligned:

What changed?

Describe the change in sentiment and volume.

Why did it change?

Show the aspects/topics and example quotes driving the movement.

Where did it change?

Identify the channel and audience segment where the shift happened.

What should we do next?

List the next actions: message tweaks, FAQ updates, landing page clarification, influencer response, customer support script changes, product feedback loop.

This is where sentiment analysis becomes a strategy tool, not a reporting exercise.

Step 9: Operationalize it (cadence, alerts, and feedback loops)

If sentiment analysis matters, it shouldn’t be a one-time project.

You want a consistent cadence, weekly or biweekly, and an alerting system for spikes in negative sentiment, especially around campaigns and launches. Some guides recommend real-time alerts for negative sentiment spikes, plus a human-in-the-loop review to keep quality high.

Operationalizing sentiment analysis usually includes:

- clear ownership (who maintains the dashboard, who reviews errors)

- defined metrics (net sentiment, negative rate, aspect sentiment)

- thresholds (what counts as a meaningful drop)

- review rhythm (weekly check-in, monthly deep-dive)

- a feedback loop (market research + product + support alignment)
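A threshold rule for alerts can be sketched in a few lines. The version below flags a spike when the negative rate rises by more than a set number of percentage points over a baseline, and only when there is enough volume to trust the change. Both default values are placeholders to adjust for your own traffic.

```python
def negative_rate(labels):
    """Share of comments labelled negative (0.0 for an empty list)."""
    return labels.count("negative") / len(labels) if labels else 0.0

def should_alert(baseline_labels, current_labels, max_increase=0.10, min_volume=30):
    """Flag a spike when the negative rate rises by more than max_increase
    (absolute, so 0.10 means 10 percentage points) on enough volume."""
    if len(current_labels) < min_volume:
        return False  # too few comments to treat the change as meaningful
    increase = negative_rate(current_labels) - negative_rate(baseline_labels)
    return increase > max_increase

# Hypothetical label lists for a baseline period and the current period.
baseline = ["negative"] * 10 + ["positive"] * 90
current = ["negative"] * 15 + ["positive"] * 35
print(should_alert(baseline, current))
```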

The more your organization trusts the system, the more you can use it for faster decisions.

Three common workflows that work

Let’s make this real and practical.

Workflow 1: Social media sentiment analysis for campaign performance

Your goal is to track the public response to a campaign in near real time.

Start with a time window (pre-launch baseline vs post-launch). Track volume and sentiment. Segment by platform. Pull out top negative themes and top positive phrases. If sentiment drops, compare what changed: creative variant, influencer post, comment thread, or press event.

This mirrors how marketers track conversations on social platforms and adjust campaigns when net sentiment falls short.

Workflow 2: Survey sentiment analysis for VoC and message clarity

Your goal is to understand the “why” behind scores like NPS/CSAT.

Run sentiment analysis on open-text responses. Then run aspect-based sentiment to connect emotion to topics like pricing, onboarding, customer support, speed, trust, and ease of use.

This workflow is especially powerful when you tie the sentiment back to the survey question prompt, because context changes meaning.

Workflow 3: Review sentiment analysis for positioning and product claims

Your goal is to understand what customers praise and what frustrates them, and use that to refine positioning.

Reviews are naturally rich in emotional language. Aspect-based sentiment analysis often reveals surprising market research insights, like “people love the design but hate the speed” or “they praise quality but complain about delivery.”

This is where sentiment analysis becomes a direct input into messaging, landing page copy, and FAQs.

Common challenges and how to handle them

Any sentiment analysis guide for market research has to address the hard parts. These are the issues that break dashboards and lead to wrong decisions.

Sarcasm and irony

Sarcasm is notoriously hard for machines because the words look positive even when the meaning is negative. AWS and IBM both call this out with examples like “Yeah, great…” or “Awesome… just what I need.”

How to reduce the risk: validate social channels more frequently, keep a sarcasm watchlist, and make sure you include example quotes in reports so humans can sanity-check.

Negation

Negation flips meaning and can throw off simple systems (“I wouldn’t say it was expensive”).

How to reduce the risk: use models/rules that handle negation patterns, and QA segments where negation is common (support tickets and long-form reviews).
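As an illustration of a simple negation rule, the sketch below flips the sign of any sentiment word that appears within a few tokens after a negator. The lexicon, negator list, and window size are assumptions, and a fixed window is a crude heuristic that will still miss long or nested negations.

```python
import re

# Illustrative word lists (assumptions, not a published lexicon).
LEXICON = {"expensive": -1.0, "good": 1.0, "helpful": 1.0, "slow": -1.0}
NEGATORS = {"not", "no", "never", "wasn't", "isn't", "wouldn't", "don't", "didn't"}

def score_with_negation(text, window=4):
    """Sum lexicon weights, flipping the sign of any sentiment word that
    appears within `window` tokens after a negator."""
    # Normalize curly apostrophes so "wouldn’t" matches the negator list.
    tokens = re.findall(r"[a-z']+", text.lower().replace("\u2019", "'"))
    score = 0.0
    negate_until = -1  # index of the last token still covered by a negator
    for index, token in enumerate(tokens):
        if token in NEGATORS:
            negate_until = index + window
            continue
        weight = LEXICON.get(token, 0.0)
        if index <= negate_until:
            weight = -weight
        score += weight
    return score

print(score_with_negation("I wouldn't say it was expensive"))
print(score_with_negation("It was expensive"))
```

Without the negation rule, both sentences would receive the same negative score, which is exactly the failure this step is meant to catch.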

Multipolarity (mixed sentiment in a single sentence)

People often say “I love the product, but support is slow.” That’s mixed sentiment.

How to reduce the risk: use aspect-based sentiment analysis. Polarity alone blurs the truth.

Idioms and cultural language

Idioms can confuse models (“break a leg,” “beat around the bush”).

How to reduce the risk: validate on your audience language and keep region/language segmentation.

Context loss (especially in survey responses)

Short answers become ambiguous without the question prompt.

How to reduce the risk: analyze question + response together, or keep them connected in the dataset.

Build vs buy: do you need a tool, a model, or both?

Many teams reach a fork in the road: build a sentiment analysis solution with open-source libraries or buy a SaaS sentiment analysis tool.

IBM frames it as open source vs SaaS: building often requires a considerable investment in engineers and data scientists, while SaaS requires less initial investment and lets you deploy a pre-trained model quickly.

For a market research analysis audience, the most honest guidance is:

- If you need fast results and don’t have ML resources, start with a tool.

- If sentiment analysis becomes core to your product or your competitive advantage, consider building or customizing (often a hybrid approach).

- If accuracy matters for high-stakes decisions (crisis comms, compliance), invest more heavily in validation and domain tuning, regardless of build vs buy.

You don’t win by choosing “build” or “buy.” You win by choosing the option you can maintain and trust.

What to include in your final report

A sentiment report that gets ignored usually has one problem: it shows sentiment numbers but doesn’t explain why.

A sentiment report that gets used is structured like a narrative:

Start with the trend. Explain the drivers. Prove it with examples. Then recommend action.

A good market research analysis deliverable typically includes:

- sentiment trend over time (and volume, so you see scale)

- net sentiment or negative rate

- top positive and negative aspects

- top phrases/keywords driving each shift

- a small set of representative quotes

- recommended next actions (message tweaks, targeting changes, FAQ updates, response plan)

FAQ: Sentiment analysis (quick answers people search for)

What is sentiment analysis in market research?

Sentiment analysis in market research is the process of analyzing customer feedback, reviews, and social conversations to measure positive, negative, or neutral sentiment, then using those insights to improve brand messaging, campaign performance, and customer experience.

How do you conduct sentiment analysis?

A practical process is: define the market research question, choose data sources, clean the dataset, select sentiment type (polarity/aspect/intent/emotion), choose a method (rule-based/ML/hybrid), run analysis, validate results, and operationalize reporting and alerts.

What are the main types of sentiment analysis?

Common types include polarity, fine-grained scoring, aspect-based sentiment analysis, intent-based analysis, and emotion detection.

What is aspect-based sentiment analysis?

Aspect-based sentiment analysis (ABSA) connects sentiment to a specific aspect of a product or experience, like pricing, onboarding, shipping, or support, so you can identify what’s driving positive or negative reactions.

What are the biggest limitations of sentiment analysis?

The most common limitations include sarcasm, negation, mixed sentiment (multipolarity), idioms, and losing context, especially when short responses are separated from their prompts.

Do I need machine learning to do sentiment analysis?

Not always. Rule-based sentiment analysis can work as a baseline, but ML or hybrid approaches can improve accuracy when you validate and tune the system for your domain.
